This is the last week of the Data & AI MVP Challenge. To finish in style, I returned to the text analysis APIs, discovered Azure's Cognitive Search solution, built bots, and finally took an overview of several aspects of accessibility. Here are my findings, happy reading!

The first part is available here: Part 1

The second part is available here: Part 2

The third part is available here: Part 3

The fourth part is available here: Part 4

Entity recognition

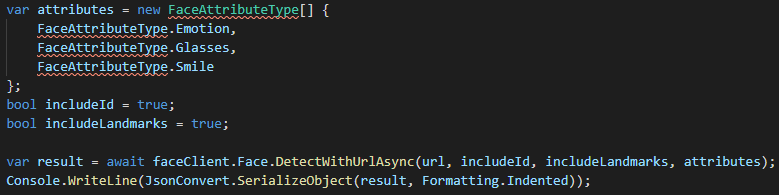

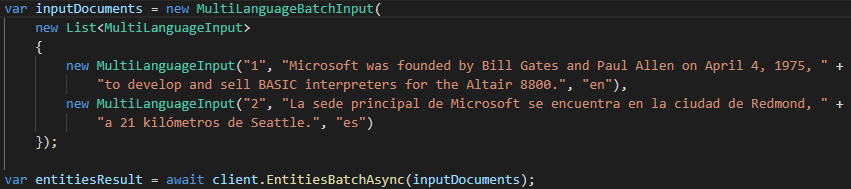

Entity recognition, which is part of the Text Analytics API, extracts a list of the entities or items that the service recognizes, and can even return links to those entities when available (for example, a link to the corresponding Wikipedia page). Since the API is already hosted for us, all we have to do once we have an Azure Cognitive Services resource is call it. With the SDK already available for C#, a call to this service takes only a few lines (plus a few instructions beforehand to initialize the client):

The API expects JSON as input, which the SDK builds for us when we use it. In the example above, the result would be a list containing, among others, "Microsoft" and "Bill Gates".
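To make the JSON input concrete, here is a minimal Python sketch of the kind of raw REST call the SDK wraps. It assumes the Text Analytics v3.0 `entities/recognition/general` endpoint and the `Ocp-Apim-Subscription-Key` header; `ENDPOINT`, `API_KEY`, and the helper names are hypothetical placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your own Cognitive Services endpoint and key.
ENDPOINT = "https://<your-resource>.cognitiveservices.azure.com"
API_KEY = "<your-key>"


def build_entities_request(text: str, language: str = "en") -> urllib.request.Request:
    """Build (but do not send) a Text Analytics v3.0 entity recognition request."""
    # The service takes a batch of documents, each with its own id.
    body = {"documents": [{"id": "1", "language": language, "text": text}]}
    return urllib.request.Request(
        url=f"{ENDPOINT}/text/analytics/v3.0/entities/recognition/general",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": API_KEY,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def entity_names(response_json: dict) -> list:
    """Collect the entity texts from a v3.0-style response payload."""
    return [
        entity["text"]
        for document in response_json.get("documents", [])
        for entity in document.get("entities", [])
    ]
```

To actually call the service, pass the request to `urllib.request.urlopen` and feed the parsed JSON body to `entity_names`. For the Wikipedia-style links mentioned above, v3.0 exposes a separate `entities/linking` endpoint that takes the same kind of body.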

Along the same lines, other APIs and SDK methods let us extract key phrases from a text or detect the language of the received text. Language detection is easy to picture in a bot that needs to know which language a person is writing in so it can give the right answer, in the right language.
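As a small illustration of that bot scenario, the Python sketch below reads the result of a language detection call and picks a reply in the detected language. It assumes the response shape of the Text Analytics v3.0 `/languages` endpoint (a `detectedLanguage` object with an `iso6391Name` field); the `REPLIES` table and function names are hypothetical.

```python
# Hypothetical canned replies keyed by ISO 639-1 code; a real bot would localize properly.
REPLIES = {
    "en": "Hello! How can I help you?",
    "fr": "Bonjour ! Comment puis-je vous aider ?",
}


def detected_language(response_json: dict) -> str:
    """Read the ISO 639-1 code of the first document from a v3.0 /languages response."""
    document = response_json["documents"][0]
    return document["detectedLanguage"]["iso6391Name"]


def greeting_for(response_json: dict, default: str = "en") -> str:
    """Pick a greeting in the detected language, falling back to a default language."""
    code = detected_language(response_json)
    return REPLIES.get(code, REPLIES[default])
```

Falling back to a default keeps the bot answering even when it detects a language it has no replies for.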

Azure Cognitive Search

Azure Cognitive Search is a tool for building an indexing and query solution over several data sources, giving us search at scale. In other words, we can take multiple data sources, extract what we need for search, and store it in an index whose entries are represented as JSON documents, which makes them very easy to search.
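To show what "represented as JSON documents" looks like in practice, here is a minimal Python sketch that builds an upload batch for an index through the REST API. It assumes the `docs/index` endpoint, the `@search.action` field, and the `2020-06-30` api-version; the service name, index name, and document fields (`id`, `title`) are hypothetical.

```python
import json

API_VERSION = "2020-06-30"  # a GA REST api-version; newer versions exist


def build_upload_batch(documents: list) -> str:
    """Wrap documents in the JSON batch format accepted by the docs/index endpoint."""
    # "upload" inserts a document, or replaces it if the key already exists.
    actions = [{"@search.action": "upload", **doc} for doc in documents]
    return json.dumps({"value": actions})


def upload_url(service: str, index: str) -> str:
    """URL to POST the batch to (an admin api-key header is also required)."""
    return f"https://{service}.search.windows.net/indexes/{index}/docs/index?api-version={API_VERSION}"
```

Other actions such as `merge` or `delete` use the same batch format, so a single request can mix them.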

Unlike a "classic" search, this search can draw on cognitive services and returns results ranked by relevance, not only based on whether the searched value appears in a field. We can even add a suggester, which enables suggestions and autocomplete while the user is typing.
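For the suggester part, here is a minimal Python sketch of an index definition that declares one, plus the URL of a suggest query against it. It assumes the REST index schema (`fields`, `suggesters` with `analyzingInfixMatching`) and the `docs/suggest` endpoint with the `2020-06-30` api-version; the index, field, and suggester names are hypothetical.

```python
from urllib.parse import urlencode

API_VERSION = "2020-06-30"


def index_definition(name: str) -> dict:
    """Index schema with one suggester over the title field (sent with PUT /indexes/{name})."""
    return {
        "name": name,
        "fields": [
            {"name": "id", "type": "Edm.String", "key": True},
            {"name": "title", "type": "Edm.String", "searchable": True},
        ],
        "suggesters": [
            {"name": "sg", "searchMode": "analyzingInfixMatching", "sourceFields": ["title"]}
        ],
    }


def suggest_url(service: str, index: str, partial_text: str) -> str:
    """GET URL returning suggestions for what the user has typed so far."""
    query = urlencode({"api-version": API_VERSION, "search": partial_text, "suggesterName": "sg"})
    return f"https://{service}.search.windows.net/indexes/{index}/docs/suggest?{query}"
```

The same suggester also serves the `docs/autocomplete` endpoint, which completes the current term instead of returning whole matching documents.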

To configure a search service, you can use the Azure web interface or do it directly in code. Personally, I found the web interface easier for learning the subject, which can feel rather imposing when you don't yet know everything it offers.

The bots

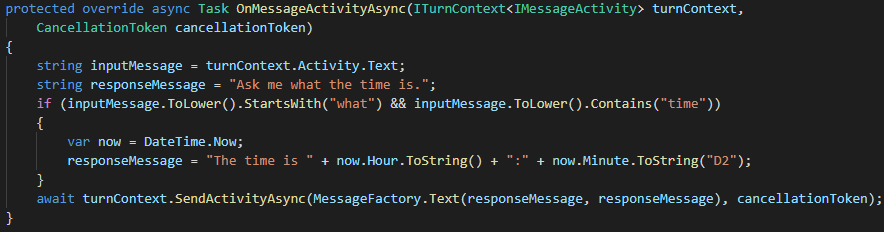

I had previously only briefly seen how to create a bot; this time I learned by actually building one. I first explored the Bot Framework SDK, which lets you create a bot as a C# project. Essentially, you override the methods whose behavior you want to redefine. For example, this method replies with the current time when a message containing the word "time" is received:

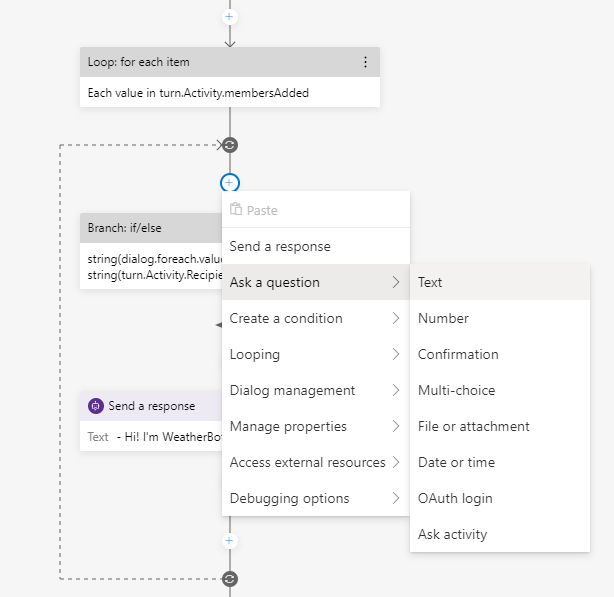
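The decision logic of such a handler can be sketched in a few lines of Python. This is not the Bot Framework API: in the SDK, this check would typically live inside the overridden message handler (`OnMessageActivityAsync` in C#, `on_message_activity` in the Python SDK). Here it is isolated as a hypothetical `reply_to` function so it runs on its own.

```python
import re
from datetime import datetime
from typing import Optional


def reply_to(message: str, now: Optional[datetime] = None) -> Optional[str]:
    """Return the current time if the message contains the word "time", else None."""
    # Word boundaries avoid triggering on words like "sometimes".
    if re.search(r"\btime\b", message, flags=re.IGNORECASE) is None:
        return None
    now = now or datetime.now()
    return f"It is {now:%H:%M}."
```

Passing `now` explicitly makes the reply testable; in the bot, the returned text would be sent back to the user, and `None` would fall through to other handling.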

The other way to create a bot is with Bot Framework Composer. This tool, which must be installed locally on your computer, lets you compose the different dialogs through an interface where you add actions, questions, or reactions to perform depending on what the user has entered. To give a quick glimpse, here is part of a bot I created, showing the menu for adding a question:

Accessibility

Accessibility is at the heart of many artificial intelligence services. One sentence from the learning modules really resonated with me: when we don't consider accessibility, we have the power to exclude people. As developers, it should therefore be one of our core concerns, addressed early in our projects rather than at the end, when it is often done halfway.

In these modules I learned, among other things, about Accessibility Insights, which helps detect accessibility problems in our web, mobile, and desktop applications. On the Office side, something I didn't know about is PowerPoint's Presenter Coach, a service that uses AI to give us feedback on our presentation and offer recommendations. Most products also include tools that help us identify and fix accessibility issues, such as missing alt text on an image in an email.
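To give an idea of what one of these checks does, here is a minimal Python sketch that flags images without alt text in an HTML fragment, using only the standard library's `html.parser`. It is a hypothetical illustration of a single rule, not how Accessibility Insights or Office implement their checkers.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no alt attribute at all."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" is deliberately allowed: it marks a purely decorative image.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src") or "<no src>")


def images_missing_alt(html: str) -> List[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

Treating an empty `alt` as valid follows the common convention that decorative images should be skipped by screen readers rather than described.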

Conclusion

May was an extremely busy month of learning for me, with one certification exam and another (Azure AI-102) that I will keep preparing for in the coming weeks, since in my opinion it is a bit more difficult than the AI-900. Below are the links to my learning module collections, which I have expanded with some modules from this last week. I am also preparing an episode of the Bracket Show to demonstrate some of the things I saw during the month.

If you have any comments or questions about any of these learnings, or the related certifications, please let me know in the comments.

Collection on Data & AI: See the collection

Collection on Machine Learning: See the collection

Collection on image analysis: See the collection

Collection on word analysis: See the collection

Bruno
