For the past few months, it has been possible to deploy Blazor applications to Azure Static Web App, a much lighter environment than App Services. In this post, which complements the video recently published on our channel, we look at how to set up these deployments through DevOps.

Continue reading « Deploy a Blazor application on Azure Static Web App with DevOps »

Tag: azure

Start an Azure build by API

Did you know that it is possible to start an Azure build through the API? It's not something we need every day, but knowing that it exists, and that the Azure APIs open up a lot of possibilities, can make our lives easier when the time comes.

Continue reading « Start an Azure build by API »

Azure Data & AI MVP Challenge - Video

The Azure Data & AI MVP Challenge is now behind me, and I came away with a lot of new knowledge, a certification and a few articles on the subject. Here is a video from The Bracket Show in which we walk through some of the features I had the chance to learn during the month.

Continue reading « Azure Data & AI MVP Challenge - Video »

Azure Data & AI MVP Challenge - Part 5

This was the last week of the Data & AI MVP Challenge. To end it in style, I came back to the text analysis APIs, discovered Azure's cognitive search solution, built bots and, finally, got an overview of several aspects of accessibility. Here are my findings, happy reading!

The first part is available here: Part 1

The second part is available here: Part 2

The third part is available here: Part 3

The fourth part is available here: Part 4

Entity recognition

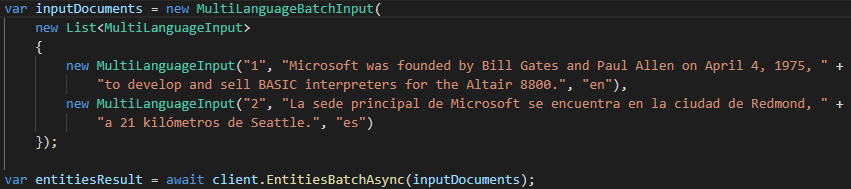

Entity recognition, which is part of the Text Analytics API, lets us extract a list of entities that the service recognizes in a text, and it can even return links about these entities when available (for example, a link to the Wikipedia page). Since the API already exists, all we have to do once we have our Azure resource for cognitive services is call it. With the SDK already available for C#, a call to this service takes only a few lines, plus a few instructions beforehand to initialize the client.

The API expects JSON as input, something the SDK takes care of for us when we use it. In the example, the result would give us a list containing, among others, "Microsoft" and "Bill Gates".
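To make the JSON part more concrete, here is a minimal Python sketch of the kind of request body the Text Analytics REST API (v3) expects for entity recognition: a `documents` array whose items each carry an `id`, a `language` and a `text`. The helper name `build_entity_request` and the sample sentence are assumptions made for illustration, not the example from the lesson, and the sketch only builds the payload without calling Azure.

```python
import json


def build_entity_request(texts, language="en"):
    """Build the JSON body for an entity-recognition call.

    Each text becomes one document with a unique string id.
    """
    documents = [
        {"id": str(index), "language": language, "text": text}
        for index, text in enumerate(texts, start=1)
    ]
    return {"documents": documents}


# The sample sentence is an assumption; it only echoes the entities named above.
body = build_entity_request(["Microsoft was founded by Bill Gates and Paul Allen."])
payload = json.dumps(body, indent=2)
print(payload)
```

This is the serialization work that the SDK hides: with the C# client we only pass the text, and the library produces an equivalent payload for us.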

In the same vein, there are also APIs and SDKs that let us extract key phrases from a text or detect the language of the text received. For language detection, one can easily imagine a bot that needs to know which language a person is speaking in order to give the right answer, in the right language.

Azure Cognitive Search

Cognitive Search is a tool that lets us build an indexing and query solution over several data sources, giving us a large-scale search solution. In other words, we can take multiple data sources, extract what we need for searching and store it in an index represented as JSON documents, which are therefore very easy to search.

Unlike a "classic" search, this search can use cognitive services to return results based on their relevance, and not only on whether the searched value is found in a field. We can even add a suggester, which enables suggestions as the user types as well as auto-completion.

To configure a search service, you can go through the Azure web interface or do it directly in code. Personally, I found the web interface an easier way to learn the subject, which can be rather daunting when you don't know everything it offers.

The bots

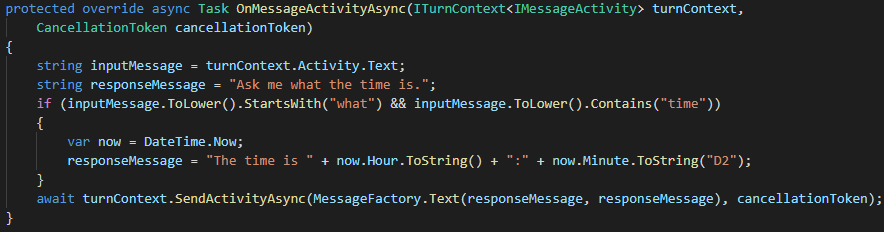

While I had briefly seen how to create a bot, this time I had the chance to learn by actually building one. I first explored the Bot Framework SDK, which lets you create a bot through a C# project. Essentially, you override the methods whose behavior you want to redefine. For example, this method answers with the current time when a question containing the word "time" is received:

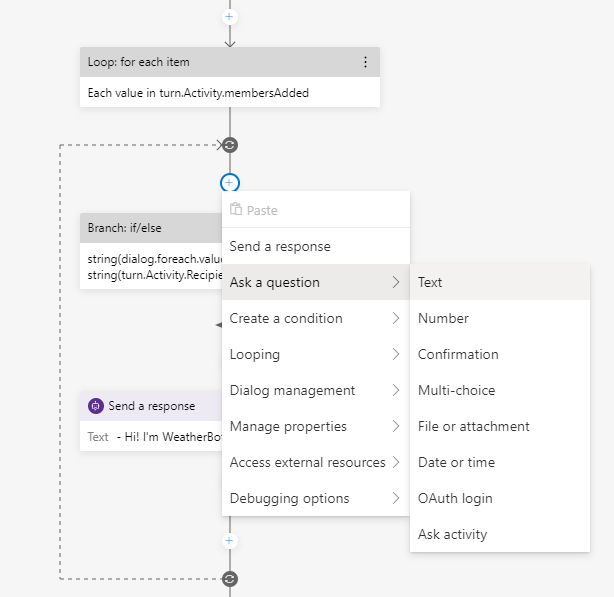
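For readers who cannot see the screenshot, the same idea can be sketched outside the SDK. The Python function below is not Bot Framework code: in the C# SDK this logic would typically live in an overridden message handler such as `OnMessageActivityAsync`, and the function name `answer` and the reply texts are assumptions made for illustration.

```python
from datetime import datetime


def answer(message, now=None):
    """Reply with the current time when the message mentions "time"."""
    now = now or datetime.now()
    # Drop the question mark so "time?" still matches the word "time".
    words = message.lower().replace("?", " ").split()
    if "time" in words:
        return f"The time is {now:%H:%M}"
    return "Sorry, I did not understand that."


print(answer("What time is it?"))
```

The optional `now` parameter only exists so that the behavior can be checked with a fixed date instead of the real clock.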

The other way to create a bot is with the Bot Framework Composer. This tool, which must be installed locally on our computer, lets us compose the different dialogs through an interface where we add the actions, questions or reactions to trigger depending on what the user has entered. To give a brief glimpse, here is part of a bot that I created, with the menu to add a question:

Accessibility

Accessibility is at the heart of many artificial intelligence services. A sentence that resonated with me a lot during these lessons said that when we don't consider accessibility, we have the power to exclude people. It should therefore be at the heart of our concerns as developers, addressed early in our projects rather than at the end, where it is often only half done.

In these few modules, I learned among other things about Accessibility Insights, which lets us detect accessibility problems in our applications, whether mobile, web or desktop. On the Office side, one thing I didn't know about is Presenter Coach in PowerPoint, a service that uses AI to give us feedback on our presentation and offer recommendations. Most products also include tools that help us identify and correct accessibility issues, such as missing alt text on an image in an email.

Conclusion

May was an extremely busy month of learning for me, with an exam for one certification and another (Azure AI-102) that I will keep preparing for in the coming weeks because, in my opinion, it is a little more difficult than AI-900. Below are the links to my learning module collections, which I have expanded with some modules from my last week. I am also preparing an episode of The Bracket Show to demonstrate some of the things I saw during the month.

If you have any comments or questions about what I learned, or about the related certifications, please let me know in the comments.

Collection on Data & AI: See the collection

Collection on Machine Learning: See the collection

Collection on image analysis: See the collection

Word Analysis Collection: See the collection

Bruno

MVP Challenge

Azure Data & AI MVP Challenge - Part 4

This week, after covering the basics of artificial intelligence, the MVP Challenge took me onto somewhat more advanced Data & AI terrain, with the different cognitive services that can be used through Azure. Here is a summary of what I learned!

The first part is available here: Part 1

The second part is available here: Part 2

The third part is available here: Part 3

The fourth part: you are here

The fifth part is available here: Part 5

Azure Content Moderator

It all started with a service I had not seen yet, which allows moderation of images, text and videos, assisted by AI of course. This service can detect profane or explicit content, for example, and can even return a classification of the explicit elements, telling us whether the content should be reviewed. In a world where the workforce is often overwhelmed, this kind of service can ease the burden by letting people moderate only what is flagged as offensive. Since everything is available through an API, it is fairly simple to set up. A very complete example to try it out is also available here.

Sentiment analysis

Another great discovery was sentiment analysis using the Text Analytics API. I had briefly seen how it works in a previous lesson, but this time I saw it in a much more concrete example. I saw how to put a queue system in place with Azure Queue Storage and an Azure Function that receives messages, determines whether they are positive, negative or neutral, and then forwards each one to the matching queue in Queue Storage.
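The routing logic itself is small, and a minimal Python sketch can show its shape. Everything here is a stand-in: the in-memory `deque` objects replace the Azure Queue Storage queues, and the keyword-based `classify` function replaces the call to the Text Analytics sentiment endpoint. The names `queues`, `classify` and `route` are assumptions made for illustration.

```python
from collections import deque

# In-memory stand-ins for three Azure Queue Storage queues (assumption).
queues = {"positive": deque(), "neutral": deque(), "negative": deque()}


def classify(message):
    """Toy stand-in for the Text Analytics sentiment call."""
    text = message.lower()
    if any(word in text for word in ("great", "love", "thanks")):
        return "positive"
    if any(word in text for word in ("bad", "broken", "hate")):
        return "negative"
    return "neutral"


def route(message):
    """Classify one incoming message and push it to the matching queue."""
    label = classify(message)
    queues[label].append(message)
    return label


for incoming in ["I love this product", "The app is broken", "Order 42 shipped"]:
    route(incoming)
```

In the real setup, the body of `route` is what the Azure Function would do each time a message arrives, with the sentiment label coming back from the service instead of a keyword list.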

Speech Service

Again, this is something I had seen earlier in the month, but this time more concretely, using the different APIs to consume the services. Whether converting audio to text or translating, API usage is much the same. We build the object we need (a SpeechRecognizer for speech-to-text, for example) from a SpeechConfig, which connects to the service on Azure, and an AudioConfig, which can select an audio file instead of the microphone, for example. All that remains is to consume the result of the method we call, which takes care of calling the cognitive service that does the work.

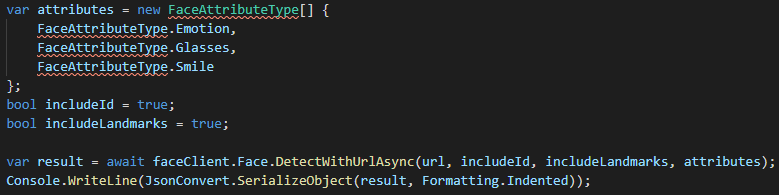

Image and video analysis

I also returned to several aspects of image analysis, among others with the Face API. Seeing that it can be used through SDKs makes it much more accessible. For example, a few lines of code are enough to detect emotions, glasses, smiles and the various identification points (landmarks) in an image.

Video analysis is something I hadn't seen before, and it brings together many of the concepts of cognitive services. In short, the Azure Video Indexer service takes videos and extracts scenes, face identification, text recognition, and even a transcription and an analysis of emotions in the audio. In the end, we get a ton of information that would allow us, for example, to search videos by their content.

Language Understanding

On this front, I had the chance to see a few more concepts than what I wrote about last week. For example, there are prebuilt domains that we can add to our LUIS projects with a few clicks, which take care of adding a large selection of intents and entities to our model without us having to do it ourselves.

I also saw that it is easy to export a LUIS application to a Docker container, with an option available directly in the export menu. One important thing to know, though, is that there are some limitations to deploying a LUIS app in a container, all of which are listed in the documentation.

Conclusion

This week was much busier in terms of using the different APIs, but extremely interesting because, beyond understanding the concepts seen in the last few weeks, I was able to see how easy they are to use with the different SDKs available. I don't have a new learning collection this week. Instead, I updated the ones I already had, so the best way to see the added items is to check them out:

Collection on Data & AI: See the collection

Collection on Machine Learning: See the collection

Collection on image analysis: See the collection

Word Analysis Collection: See the collection

Bruno

MVP Challenge

Azure Data & AI MVP Challenge - Part 3

It has been two weeks since I started the Azure Data & AI MVP Challenge. After exploring machine learning and image analysis, the past week was more about text analysis, speech, natural language understanding and building bots for online help. Again, here is a summary of what I learned over the past week.

The first part is available here: Part 1

The second part is available here: Part 2

The third part: you are here

The fourth part is available here: Part 4

The fifth part is available here: Part 5

Text analysis

First of all, what is text analysis? It is a process in which an artificial intelligence algorithm evaluates the attributes of a text to extract certain information. Since text analysis has become very sophisticated, i.e. able to understand the semantics of words rather than just translating word for word, it is generally better to use a service like Text Analytics on Azure than to program it ourselves, especially considering that it is only a matter of creating the resource on Azure and then using the exposed endpoint.

Thanks to this kind of analysis, we can determine the language of a text, but also do more advanced things such as detecting the sentiment of a text. This could, for example, make it possible to determine whether a review on an e-commerce site is positive or negative, and even allow us to react more quickly. We would also be able to extract points of interest from a document.

Speech recognition and synthesis

Whether with our smart speakers or our phones, we use this feature every day. Speech recognition is the process of interpreting audio (our voice, for example) and converting it into text that can be processed. Synthesis is the reverse process: it takes text and converts it to audio, with the text to say and the choice of voice as input.

Like everything I am currently exploring about AI on Azure, everything is in place to offer us a service that is already trained for this. With the Speech resource (or the Cognitive Services resource if we use several features), we can access the Speech-to-Text or Text-to-Speech APIs to get the job done through an endpoint.

Text or speech translation

Translation is another thing we use frequently in our daily lives. We are a long way from what it used to be, now that semantics are taken into account in translations. One thing I learned about Azure's Translator Text service, though it doesn't surprise me, is that over 60 languages are supported for text-to-text translation. You can also add filters to avoid vulgar words, or add tags on content that you don't want translated, like the name of a company.
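As a rough illustration of those two options, here is a Python sketch that builds a request for the Translator REST API (v3) without sending it. It relies on the documented `profanityAction` query parameter and on the `notranslate` class, which is honored when `textType` is `html`. The helper name `build_translate_request` and the company name Contoso are assumptions made for illustration, and a real call would also need the subscription key headers.

```python
import json
from urllib.parse import urlencode

ENDPOINT = "https://api.cognitive.microsofttranslator.com/translate"


def build_translate_request(html_text, to_language, profanity_action="Marked"):
    """Return the URL and JSON body for a Translator v3 text translation."""
    query = urlencode({
        "api-version": "3.0",
        "to": to_language,
        "textType": "html",  # needed so the notranslate markup is honored
        "profanityAction": profanity_action,  # NoAction, Marked or Deleted
    })
    body = [{"Text": html_text}]
    return f"{ENDPOINT}?{query}", json.dumps(body)


# The span marks the (hypothetical) company name as content to leave untranslated.
url, body = build_translate_request(
    'Welcome to <span class="notranslate">Contoso</span>!', "fr"
)
print(url)
print(body)
```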

Natural language comprehension

Language Understanding is the art of taking what a user says or writes in natural language and turning it into an action. Three things are essential to this concept. The first is what we call utterances, i.e. the sentences to interpret, for example "turn on the light". Then we have intents, which represent what we want to do within the utterance. In this example, we would have a "Turn on" intent that performs the task of switching something on. Finally, we have entities, which are the items the utterance refers to. In the same example, that would be "light".
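The three concepts can be pictured as a small data structure. The Python sketch below is only a toy: a real LUIS model learns from labeled utterances, whereas this one matches keywords. The labels `TurnOn` and `TurnOff`, the entity list and the `predict` function are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    utterance: str  # the raw sentence to interpret
    intent: str  # what the user wants to do
    entities: list = field(default_factory=list)  # items the utterance refers to


# Hypothetical labels; a real LUIS model learns these from labeled utterances.
INTENT_KEYWORDS = {"TurnOn": "turn on", "TurnOff": "turn off"}
KNOWN_ENTITIES = ("light", "fan")


def predict(utterance):
    """Toy keyword matcher standing in for a trained language model."""
    text = utterance.lower()
    intent = next(
        (name for name, phrase in INTENT_KEYWORDS.items() if phrase in text),
        "None",
    )
    entities = [entity for entity in KNOWN_ENTITIES if entity in text]
    return Prediction(utterance, intent, entities)


result = predict("Turn on the light")
print(result.intent, result.entities)
```

An application would then branch on `result.intent` and use `result.entities` to decide what the action applies to.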

Once you have these concepts, setting it all up is pretty straightforward using LUIS (Language Understanding Intelligent Service). We define a list of utterances, intents and entities, something that can also be done from a predefined list for certain domains. This is what we call authoring the model. Once it is trained (a simple click of a button), we can publish it and use it for predictions in our applications.

Construction of a bot

The QnA Maker cognitive service on Azure is the foundation that lets us build a bot able to answer questions automatically, for an automated support service for example. We build a bank of questions and answers from which we train the knowledge base. This applies natural language processing to the questions and answers, so that the system can respond even if a question is not phrased exactly as it was entered.

Once we have this, we just have to build a bot that uses this knowledge base. It's possible to use an SDK and jump into some code to do it, but I must admit that I loved simply pushing a button in my QnA Maker knowledge base, which automatically created a bot for me. All I had to do next was use it in my app.

Microsoft Azure AI-900 certification

Once all these lessons were completed, I realized that I had covered everything needed to prepare for the Azure AI Fundamentals certification exam. So, having spent the last two weeks learning all this, I didn't wait: I scheduled my exam and got my certification. If you follow these lessons consistently, I am sure you will also be able to take the exam and get your certification, since the lessons prepare us really well.

Conclusion

I have added a few lessons to my general lesson collection, as well as a new collection for everything related to word analysis, which took up most of my past week. Do not hesitate to let me know in the comments if you are working towards the certification or if you have any questions!

Collection on Data & AI: See the collection

Collection on Machine Learning: See the collection

Collection on image analysis: See the collection

Word Analysis Collection: See the collection

Bruno

MVP Challenge

Azure Data & AI MVP Challenge - Part 2

Last week, I started the Azure Data & AI MVP Challenge. So here I am a week later, having explored several aspects of Machine Learning and everything related to image analysis. Here is a summary of my findings.

The first part is available here: Part 1

The second part: you are here

The third part is available here: Part 3

The fourth part is available here: Part 4

The fifth part is available here: Part 5

Azure Data & AI MVP Challenge - Part 1

I recently had the opportunity to take part in the MVP Challenge, a challenge designed to get us exploring new avenues. For my part, I chose to complete it in an area that piques my curiosity and that I had never taken the time to explore: Data & AI. My next articles will therefore be a review of my journey in this adventure, hoping to pique your curiosity too!

The second part is available here: Part 2

The third part is available here: Part 3

The fourth part is available here: Part 4

The fifth part is available here: Part 5